An Extensible Benchmark Framework for Real-Time Applications

About

RT-Bench is a collection of popular benchmarks for real-time applications which have been restructured to be executed periodically.

RT-Bench is licensed under the MIT license and integrates benchmark suites that are licensed according to the information contained in their respective folders.

RT-Bench is developed by researchers and collaborators affiliated with the Cyber-Physical Systems Lab at Boston University with contributions from the Chair of Cyber-Physical System in Production Engineering at TUM.

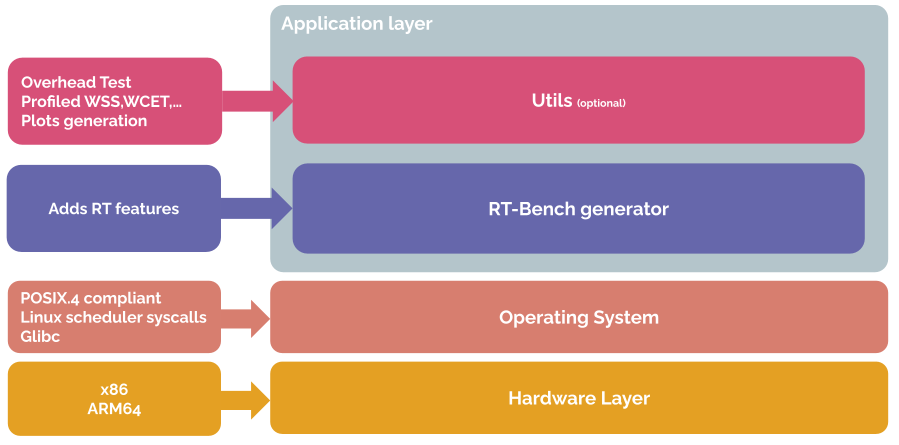

The framework lives entirely in user space and is composed of the RT-Bench Generator and a collection of scripts that form the optional Utils layer.

RT-Bench Stack

Project Information

Benchmarking is crucial for testing and validating any system, including, and perhaps especially, real-time systems.

Typical real-time applications adhere to well-understood abstractions: they exhibit periodic behavior, operate on a well-defined working set, and strive for a stable response time, avoiding unpredictable factors such as page faults.

Unfortunately, available benchmark suites fail to reflect key characteristics of real-time applications. Practitioners and researchers must either benchmark heavily approximated real-time environments or re-engineer available benchmarks to add, if possible, the sought-after features.

Additionally, the measuring and logging capabilities provided by most benchmark suites are not tailored “out-of-the-box” to real-time environments, and changing basic parameters such as the scheduling policy often becomes a tiring and error-prone exercise.

RT-Bench is a framework that implements real-time features in a generic fashion, so that different benchmarks gain these features out of the box and expose them through a CLI.

The above table summarizes the essential characteristics (columns) of the surveyed benchmarks (rows). The reported characteristics include:

- The Type of benchmark the suite offers according to the aforementioned categories.

- The capability to be executed in a Periodic fashion

- The provided cross-platform support

- Whether it provides a unified interface with other suites

- The metrics natively reported by the suites. From left to right, these indicate whether the deadline has been met, the execution time, the processor utilization, the density, the empirically observed WCET, the ability to collect and report end-to-end hardware events obtained through performance counters (e.g., cache accesses), and the ability to monitor the trend of observed hardware events throughout the execution.
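As an illustration of the timing metrics listed above, the following minimal sketch derives them from per-job timestamps. It assumes the textbook definitions (utilization as execution time over period, density as execution time over the smaller of deadline and period); the `JobRecord` layout and the `summarize` helper are hypothetical names chosen for this example and are not part of RT-Bench.

```python
from dataclasses import dataclass


@dataclass
class JobRecord:
    """One observed job, times in seconds (hypothetical record layout)."""
    release: float    # instant at which the job was released
    finish: float     # instant at which the job completed
    exec_time: float  # measured execution time of the job


def summarize(jobs, period, deadline):
    """Derive per-job and per-run metrics using textbook definitions."""
    return {
        # A job meets its deadline if its response time fits within it.
        "deadline_met": [j.finish - j.release <= deadline for j in jobs],
        "utilization": [j.exec_time / period for j in jobs],
        "density": [j.exec_time / min(deadline, period) for j in jobs],
        # Empirical WCET: the largest execution time seen in this run.
        "observed_wcet": max(j.exec_time for j in jobs),
    }


jobs = [JobRecord(0.0, 0.25, 0.25), JobRecord(1.0, 1.75, 0.5)]
report = summarize(jobs, period=1.0, deadline=0.5)
print(report)
```

In this example the second job is delayed after its release and responds in 0.75 s, so it misses the 0.5 s deadline even though its execution time alone would have fit.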

Note that categories for which no clear-cut answer exists are marked in orange. This is the case for the supported platforms and for the tests provided by RT-Bench that are noted as script, meaning that they rely on high-level tools.

The table highlights the necessity of a framework that is specifically designed for the analysis of real-time systems. Indeed, the core philosophy of the proposed RT-Bench framework is to provide an infrastructure to build a reference set of real-time benchmarks with standard functionalities. As a first step in this direction, RT-Bench already offers key analysis tools such as execution-time distribution analysis, WSS examination, and sensitivity to interference. Moreover, with RT-Bench, existing benchmarks can be integrated to execute periodically and to exhibit a controlled memory footprint with minimal re-engineering effort.

The objective of the proposed RT-Bench framework is three-fold:

- Common and cohesive interfaces.

The use of benchmark suites is widespread in the real-time community, and it is not rare for multiple suites to be jointly used in a given study. These suites are, in most cases, contributions from distinct individuals with particular focuses, ranging from CPU- or memory-bound to CPU- or memory-intensive applications. Unfortunately, while this diversity is a strength, it entails a fragmentation of the parameters available (e.g., assigned processing units, scheduling policy), the metrics reported (e.g., response time, working set size), and the overall user experience. RT-Bench aims to bridge this gap by offering a unified and coherent interface that homogenizes the available features and the reports generated for any benchmark.

- Adherence to Real-Time System Abstractions.

We aim to incorporate, within the proposed RT-Bench framework, a set of features that are in line with the typical models and assumptions used for research, analysis, and testing of real-time systems. We consider this objective of paramount importance and a clear distinguishing factor compared to the surveyed suites. RT-Bench is deliberately designed from the ground up to transform any one-shot benchmark into a periodic application with deadline enforcement and job-skipping semantics, with compartmentalized one-time initialization and teardown routines, so as to obtain precise measurements. In addition, any benchmark integrated within RT-Bench natively features options to control allocation on a specific set of cores, to be assigned a scheduling policy, and to limit and pre-allocate memory. These features effectively align the behavior of RT-Bench applications with a critical mass of assumptions and abstractions that are customary when analyzing real-time systems.

- Extensibility, portability, and usability.

We carefully designed the proposed framework, RT-Bench, to be easily extensible, portable, and practical. We deliberately implemented the RT-Bench core features to operate in user space to decouple our framework from any system-specific constraints. We do so by leveraging widespread POSIX system-level interfaces. This enables RT-Bench benchmarks to be deployed on a wide range of OSes and bare-metal software stacks (e.g., Newlib). There are only two exceptions to this rule, which correspond to two advanced features provided by the framework. The first is the ability to gather timing statistics directly from architecture-specific performance counters. In this case, assembly functions to support x86, AArch32, and AArch64 systems have already been included. The second is the ability to also gather statistics from performance counters, which relies on the Perf infrastructure available by default in typical Linux kernels.

To enhance usability, we also provide a complete set of automated build scripts. Likewise, we include a large set of on-the-fly/post-processing scripts.
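The periodic execution model with job skipping mentioned under the second objective can be sketched as follows. This is an illustrative loop under stated assumptions, not the RT-Bench implementation: `run_periodic` and its parameters are hypothetical, the deadline is only checked after each job completes rather than enforced by interrupting it, and releases that fall inside an overrunning job are simply dropped. The clock and sleep functions are injectable so that the logic can be exercised without real waiting.

```python
import math
import time


def run_periodic(job, period, deadline, n_periods,
                 clock=time.monotonic, sleep=time.sleep):
    """Release `job` once per period and skip releases that were overrun.

    Returns a list of (release_index, exec_time, deadline_met) tuples.
    """
    start = clock()
    results = []
    k = 0
    while k < n_periods:
        release = start + k * period
        now = clock()
        if now < release:
            sleep(release - now)  # wait until the k-th release instant
        t0 = clock()
        job()
        t1 = clock()
        results.append((k, t1 - t0, (t1 - release) <= deadline))
        # Job skipping: resume at the first release that is not in the past.
        k = max(k + 1, math.ceil((t1 - start) / period))
    return results


if __name__ == "__main__":
    # Three 10 ms periods with a trivial job.
    for row in run_periodic(lambda: sum(range(1000)), 0.01, 0.01, 3):
        print(row)
```

With a period of 4 time units, a job that takes 5 units after being released at t=4 finishes at t=9, so the release at t=8 is skipped and execution resumes with the release at t=12.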

RT-Bench Generator

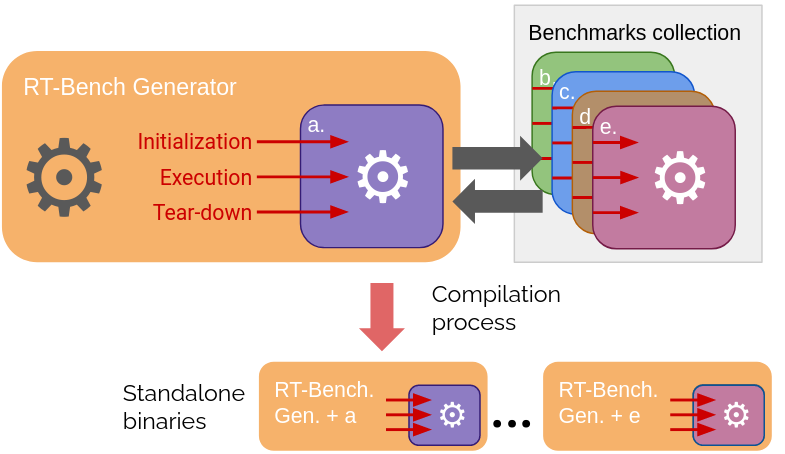

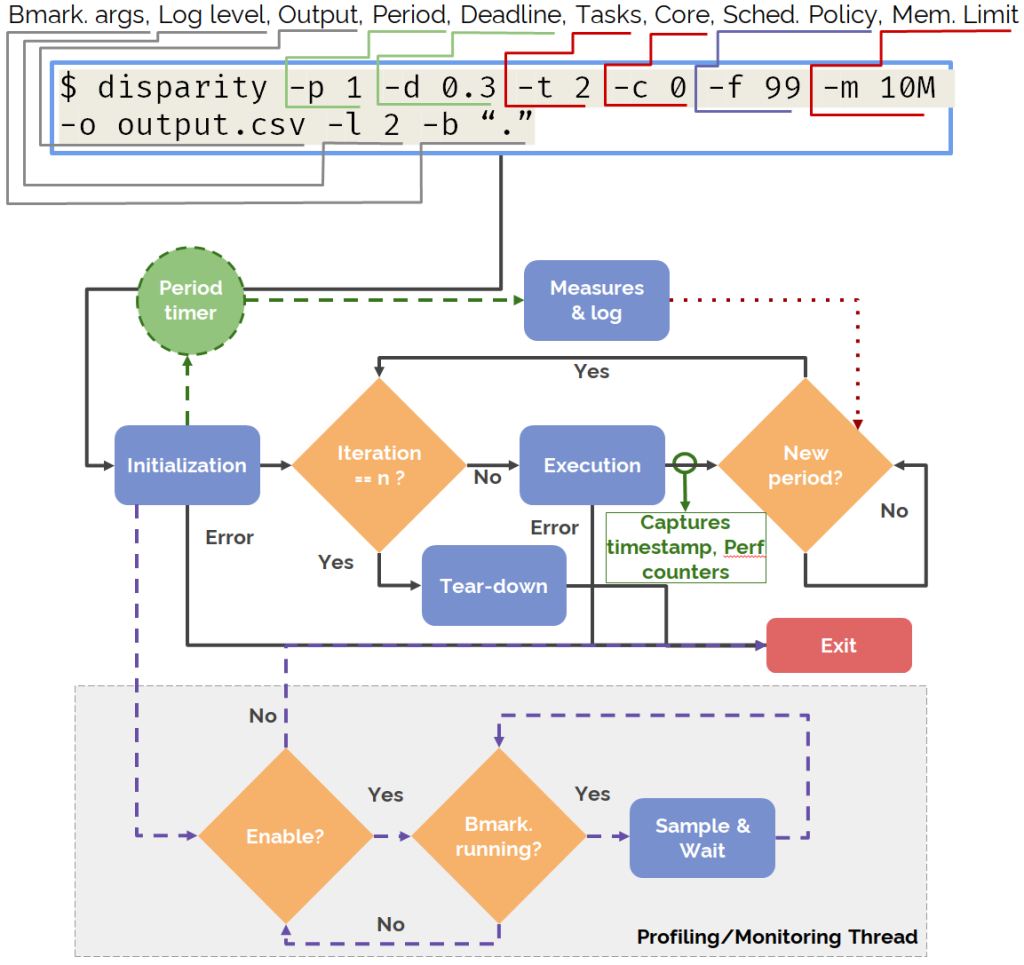

The RT-Bench framework comprises three specific components:

- The RT-Benchmark Generator (mandatory).

RT-Bench is designed to be extended with additional third-party benchmarks. Any ported benchmark shall follow the same interface and shall support the same real-time features. The conversion to the RT-Bench format is near-transparent, as it only requires the benchmark to be slightly altered to comply with the proposed interface. The interface consists of three functions that must be implemented for a benchmark to be integrated in RT-Bench and that act as harness points:

- Initialization: initializing shared resources such as memory, file descriptors, shared data objects, and the like.

- Execution: executing the main application logic/algorithm.

- Teardown: freeing any of the resources used.

Their exact utilization is, from the standpoint of the benchmark, opaquely driven by the RT-Benchmark Generator, decoupling real-time features from the design of the application at hand.

- Utils

The RT-Bench framework also comes with project maintenance and deployment tools, further improving portability and usability. The framework provides a fully automated build system to generate RT-Bench benchmarks for each supported suite. It enables the building and management of suites individually and globally. Additionally, complete documentation regarding the framework's RT-Benchmark Generator is provided. This documentation is available in both HTML and LaTeX, locally and on the framework's repository.

- Measurements Processing tools.

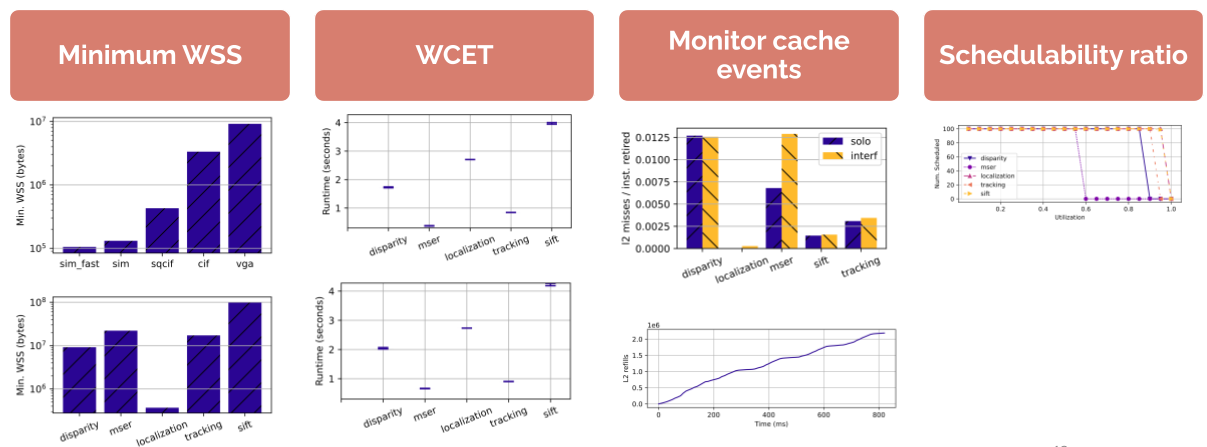

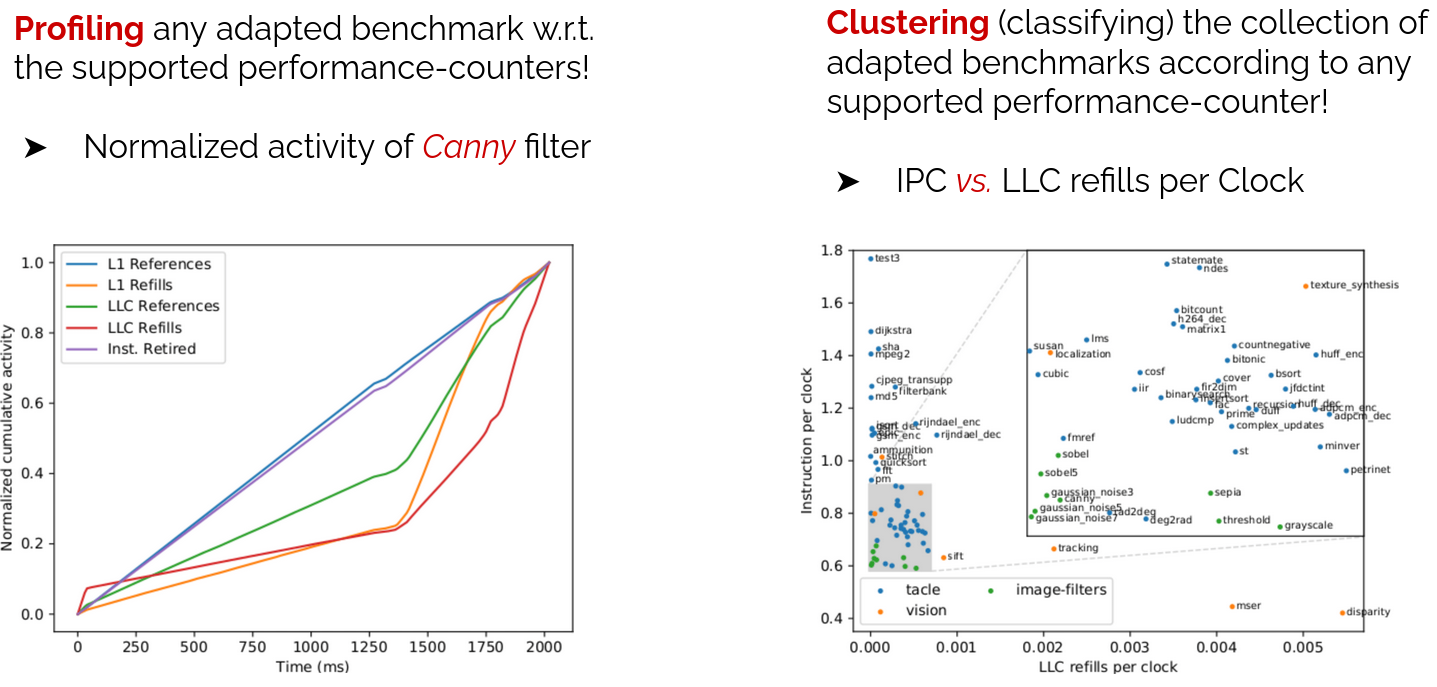

The framework also includes a series of optional high-level scripts built on top of the generator. The provided scripts are written in high-level languages such as Python and Bash. They aim to provide a well-rounded user experience in at least four ways:

- They automatically perform common tasks such as empirically determining a benchmark's WSS, WCET, and ACET;

- They ease the launch of interfering tasks, both memory- and CPU-intensive, on the same or other available CPUs;

- They perform system-dependent preparation tasks such as migrating and pinning on selected CPUs to limit undesired interference;

- They generate plots of the obtained results using plotting libraries.

Only the RT-Benchmark Generator is mandatory; the other components are optional.
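The three harness points described for the generator (initialization, execution, teardown) can be illustrated with a small sketch. The class and function names below (`Benchmark`, `VectorSum`, `drive`) are hypothetical and chosen only for this example; they do not reproduce the actual RT-Bench interface, which is documented with the framework. The point shown is the separation of concerns: the benchmark supplies the three routines, while a driver standing in for the generator decides when and how often they are called and takes the measurements.

```python
import time


class Benchmark:
    """Hypothetical base class mirroring the three harness points."""

    def init(self):
        raise NotImplementedError

    def execute(self):
        raise NotImplementedError

    def teardown(self):
        raise NotImplementedError


class VectorSum(Benchmark):
    """Toy benchmark: sums a vector that is allocated once at init."""

    def init(self):
        self.data = list(range(1000))  # one-time resource allocation
        self.result = None

    def execute(self):
        self.result = sum(self.data)   # main application logic

    def teardown(self):
        self.data = None               # release resources


def drive(benchmark, n_jobs):
    """Stand-in for the generator: owns call order and timing."""
    timings = []
    benchmark.init()
    try:
        for _ in range(n_jobs):
            t0 = time.perf_counter()
            benchmark.execute()
            timings.append(time.perf_counter() - t0)
    finally:
        benchmark.teardown()           # always runs, even on failure
    return timings


bench = VectorSum()
timings = drive(bench, n_jobs=3)
print(len(timings), bench.result)
```

Because initialization and teardown sit outside the timed loop, only the execution routine contributes to the measured per-job times.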

RT-Bench Control Flow

Usage Examples

Milestones

Dec, 2022

Publication

Know your Enemy: Benchmarking and Experimenting with Insight as a Goal

by Nicolella et al.

Sep, 2022

Code Milestone

RT-Bench now includes data from CPU performance counters on supported platforms.

Aug, 2022

Code Milestone

More image filter benchmarks have been added to the suite and CLI parameters can now be given via a JSON file.

Jul, 2022

Code Milestone

The Tacle-bench benchmarks and image processing benchmarks have been added to the suite.

Jun, 2022

Publication

RT-Bench: an Extensible Benchmark Framework for the Analysis and Management of Real-Time Applications

by Nicolella et al.

Mar, 2022

Code Milestone

The RT-Bench framework is published with SD-VBS and Isolbench benchmarks.